How GPUs Work

Preface

I've been in the Machine Learning space for a while now, and GPUs still seem to be treated as the stuff of magic. In this post, I'll try to break down the intuitions behind acceleration hardware and how it powers training and inference.

Contents

Throwback to CPUs

Registers

Instruction Cycle

Structure

Enter GPUs

Parallel Computation

GPUs in the Machine Learning Context

Vectorisation

What would CPUs do

Parallelised Neural Networks

GPUs over CPUs

Conclusion

Throwback to CPUs

You can skip this part if you already know what's going on.

Before we dive into GPUs, it's wise to understand how a CPU works.

The Central Processing Unit is essentially the brain of the computer.

It performs basic operations such as arithmetic, logic, and handling input/output. The CPU's fundamental purpose is to execute a sequence of instructions (called a program).

A CPU can perform a small set of fundamental operations, which it combines to achieve different results based on what a program asks it to do. Programs are usually loaded from the disk into the RAM, and the CPU reads their instructions from there as they run.

Before I get to these operations, let's talk a bit about Registers.

Registers

Registers – more formally known as Processor Registers – are temporary "pockets" of computer memory the CPU uses to store and transfer data or instructions being used immediately.

These registers can hold whole instructions, sequences of bits/bytes, memory addresses, or any data for that matter. As one can assume, the CPU needs to be able to access any register quickly at any given time.

Now that we understand, at a high level, what a register is, let's look at the various operations the CPU performs when running a program.

Instruction Cycle

Let's say you're preparing a feast for your family dinner tonight. We'll go about it the same way a CPU would.

Fetch

This is when the CPU reads the program from the RAM, one instruction at a time. The location of the instruction to fetch is held in the Program Counter (PC), a type of register that stores the memory address of the next instruction to be run as soon as the current instruction is completed.

The PC keeps track of where the CPU is when running a sequence of instructions.

It's like your location pin on Google Maps when you're taking a route from home to the supermarket. The CPU refers to the PC when moving from one instruction to another, like you would when navigating from one landmark to another en route.

The CPU then temporarily stores the fetched instruction in the Instruction Register (IR), and the PC is updated to point to the next instruction in the sequence.

Decode

Here, the current instruction stored in the IR is interpreted. The IR holds this instruction while it is decoded and finally executed. When decoding the low-level machine code, the CPU works out which operation is being requested, the operands involved in the instruction are retrieved from registers or memory, and CPU resources are allocated to execute it.

This is similar to preparing the kitchen before cooking a meal. You take out all the utensils, bowls, raw food products, and place them on the counter. You'd probably even start pre-heating the oven.

The decoding step is very similar to this – we're preparing the CPU to execute the instruction.

Execute

After decoding, the instructions are sent as signals to the appropriate parts of the CPU (more on this later) for execution. Sometimes, more registers are used to read the data, send it to the relevant parts of the CPU for further processing, and store the results back into other registers.

This step is analogous to actually preparing the meal using the raw ingredients and cooking implements. You follow the recipe (aka, the instructions) line by line, performing operations on the food using the utensils.

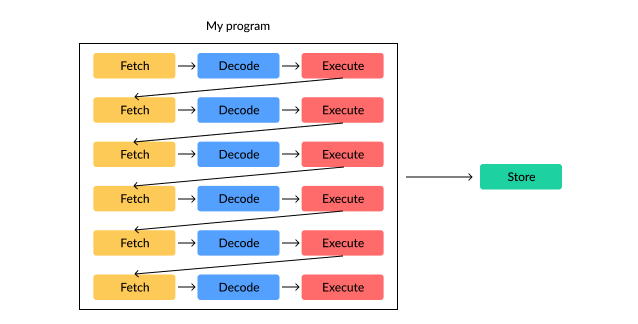

Altogether, these 3 steps (fetch, decode, execute) are called the Instruction Cycle. The CPU repeats this cycle for every instruction in the program.

Store

After an instruction has been executed, the resulting data is written back, either to a register or to the RAM. The PC now holds the memory address of the next instruction and the loop repeats itself.

You can think of this process as storing the final dish in the fridge for long-term storage (would not advise with real food, though) and later use.

Writing to the RAM does not have to happen after every single instruction. Intermediate results often stay in registers, and it is the final combined results of the instructions that get passed into memory.
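To make the cycle concrete, here is a minimal sketch of a toy CPU in Python. The four-instruction machine (`LOAD`, `ADD`, `STORE`, `HALT`), the register names `A` and `B`, and the `run` function are all made up for illustration and do not correspond to any real instruction set.

```python
def run(program, memory):
    """Run a toy program; every name here is hypothetical, not a real ISA."""
    registers = {"A": 0, "B": 0}   # general-purpose registers
    pc = 0                         # Program Counter: index of the next instruction
    while pc < len(program):
        ir = program[pc]           # Fetch: copy the instruction into the IR
        pc += 1                    # the PC now points at the next instruction
        opcode, *operands = ir     # Decode: split into operation and operands
        if opcode == "LOAD":       # Execute: memory -> register
            reg, addr = operands
            registers[reg] = memory[addr]
        elif opcode == "ADD":      # Execute: register + register
            dst, src = operands
            registers[dst] = registers[dst] + registers[src]
        elif opcode == "STORE":    # Store: register -> memory
            reg, addr = operands
            memory[addr] = registers[reg]
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory


program = [
    ("LOAD", "A", 0),   # A = memory[0]
    ("LOAD", "B", 1),   # B = memory[1]
    ("ADD", "A", "B"),  # A = A + B
    ("STORE", "A", 2),  # memory[2] = A
    ("HALT",),
]
print(run(program, [2, 5, 0]))  # [2, 5, 7]
```

Notice that only the `STORE` instruction touches memory. The intermediate values live in the registers until then.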

Structure

Within the CPU are several components that take care of these operations in the Instruction Cycle. These are the actual pieces of hardware responsible for the processing and execution of instructions.

ALU

The Arithmetic Logic Unit (ALU) is responsible for, well... arithmetic and logic. It performs basic operations like addition, subtraction, multiplication, and division, along with bitwise logic and comparisons. The ALU takes in an opcode (the type of operation to be performed) and operands (the data on which to perform said operation).

For instance, when we pass something like 2 + 5 into the ALU, it recognises that we want to perform addition on 2 and 5, so the output of the unit is 7. Deep inside the ALU, these numbers are represented in binary, and the ALU performs bitwise operations on them that implement the arithmetic ones mentioned above.
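As a rough illustration of how arithmetic can be built out of bitwise operations, here is a small sketch of an ALU-like function in Python. The `alu` and `add_bitwise` names and the opcode strings are assumptions made for this example. Real ALUs do this in hardware with adder circuits, not loops.

```python
def add_bitwise(a, b):
    """Add two non-negative integers using only AND, XOR and shifts."""
    while b != 0:
        carry = a & b    # positions where both bits are 1 produce a carry
        a = a ^ b        # XOR adds each bit position, ignoring carries
        b = carry << 1   # carries move one position to the left
    return a


def alu(opcode, x, y):
    """Hypothetical ALU: pick an operation based on the opcode."""
    if opcode == "ADD":
        return add_bitwise(x, y)
    if opcode == "AND":
        return x & y
    if opcode == "OR":
        return x | y
    if opcode == "XOR":
        return x ^ y
    raise ValueError(f"unknown opcode: {opcode}")


print(bin(2), bin(5))    # 0b10 0b101
print(alu("ADD", 2, 5))  # 7
```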

Control Unit

You can think of the Control Unit (CU) as the supervisor of the entire process, an orchestrator of sorts. While a program is being executed, it instructs the different components of the CPU, like the ALU, on how to behave. It also directs the various I/O devices connected to the processor.

Enter GPUs

Graphics Processing Units are a whole other ball game. These are specialised hardware units with dedicated memory, built for specific mathematical operations (mainly floating-point operations). Before I get into what a GPU does, let's look at Parallel Computation (PC, not to be confused with the Program Counter from earlier).

Parallel Computation

Given a large task, parallel computation allows us to break it into smaller tasks that can all run simultaneously, hence the name, "parallel". The results of all these sub tasks are then synchronised and put back together to form the solution for the initial large problem.

The number of sub tasks that can run at the same time depends heavily on the number of cores (dark rectangles shown above) a hardware unit has. To put things into perspective, a quick Google search suggests that a typical CPU has between 2 and 18 cores.
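The split, compute, and combine pattern described here can be sketched in a few lines of Python. The helper names (`split`, `sum_of_squares`, `parallel_sum_of_squares`) and the choice of 4 workers are assumptions for illustration. In standard CPython, threads largely take turns running Python code, so treat this as a picture of the pattern rather than a demonstration of real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_chunks):
    """Break a list into at most n_chunks roughly equal pieces."""
    if not data:
        return []
    size = -(-len(data) // n_chunks)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """The small sub task each worker performs on its own piece."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, n_workers=4):
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))  # sub tasks
    return sum(partials)  # synchronise and combine the partial results


data = list(range(1, 101))
print(parallel_sum_of_squares(data))  # 338350
```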

Compared to this, a good GPU potentially has thousands of cores waiting to crunch really large amounts of data. It's really like giving steroids to your machine. For instance, here's a list of popular GPUs for Machine Learning and the number of cores their processor contains:

Note: Try not to compare the performance of two different generations of GPUs simply by the number of cores they have. Hardware architecture also plays a huge part in versatility.

Long story short, GPUs are devices that perform parallel computation. You can think of their cores as the dark rectangles in the diagram above, but this time, there are thousands of them instead of 5. One stark difference, though, is that GPU cores are physically smaller (and simpler) than CPU cores.

The capacity of a GPU is usually quantified using Video RAM (VRAM). VRAM is the specialised memory on the GPU, originally used to store image data when dealing with computer graphics. It indicates how much data the GPU can hold at once: more VRAM means larger models and batches can sit right next to the cores. This ultimately means your GPU has quicker access to important data as and when needed, which is imperative in the ML scene.
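One rough way to reason about VRAM is to estimate how much memory a model's weights alone would occupy. The sketch below assumes 32-bit floats (4 bytes each). The parameter count and the 8 GiB card are hypothetical numbers picked for the example. Training needs noticeably more than this, since gradients, activations, and optimiser state also live in memory.

```python
BYTES_PER_FLOAT32 = 4  # a 32-bit float occupies 4 bytes


def weights_size_gib(n_parameters, bytes_per_value=BYTES_PER_FLOAT32):
    """Approximate memory needed to hold the weights, in GiB."""
    return n_parameters * bytes_per_value / 2**30


def fits_in_vram(n_parameters, vram_gib):
    """True if the weights alone fit in the given amount of VRAM."""
    return weights_size_gib(n_parameters) <= vram_gib


n_params = 25_000_000  # hypothetical model size
print(round(weights_size_gib(n_params), 3))  # 0.093
print(fits_in_vram(n_params, vram_gib=8))    # True
```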

GPUs in the Machine Learning Context

Who doesn't like to train on a GPU? The speedup in training and inference times can be dramatic.

Fun fact: much of the work inside Deep Neural Networks falls under the category of Embarrassingly Parallel. These are problems that can easily be parallelised, i.e. broken into smaller independent sub tasks with little to no extra effort, hence the name, "embarrassingly".

The reason why we can accelerate most Deep Learning operations on GPUs is that, within a single operation such as a matrix multiplication, the individual computations are independent of each other: none of them depends on the result of another.

Vectorisation

The trick to performing parallel processing on numbers is to treat them all like vectors. Each core will then take up the operations related to a specific row within those vectors:

Now, imagine the first two operations happening multiple times to create a Deep Neural Network with a final loss function.

What exactly do these vectors look like? Ultimately, they are columns of numbers, each row representing a new set of features or activations. Each core can take in one row from the Weight matrix, one row from the feature matrix, and one row from the Bias matrix and perform that first computation to get vector Z:

Once the results of all the computations are collected, they are synchronised, and finally put back into place in the Z vector.
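Here is a minimal sketch of that row-wise idea, assuming the first computation is a weighted sum plus bias for a single input vector `x`, so that each row computes `dot(W[i], x) + b[i]`. The matrices, the `compute_row` helper, and the use of one worker per row are illustrative assumptions. A thread pool stands in for GPU cores here purely to show the shape of the work.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(u, v):
    """Dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


def compute_row(task):
    """Work done by one 'core': a single row of Z."""
    w_row, x, b_i = task
    return dot(w_row, x) + b_i


W = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]  # weights, one row per output
x = [2.0, 1.0]                             # input features
b = [0.5, -1.0, 0.0]                       # biases, one per output

# One independent task per row; no task needs another task's result.
tasks = [(W[i], x, b[i]) for i in range(len(W))]
with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
    Z = list(pool.map(compute_row, tasks))  # results come back in row order

print(Z)  # [4.5, 0.0, 5.0]
```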

What Would CPUs Do?

CPUs are known for their sequential processing – they do tasks one by one until all of them are completed. This is essentially why your CNN takes ages to train on MNIST 😢

A standard-issue CPU would go down the vector one row at a time, perform the dot product, add the bias and compute the final result. Then it'd take these individual results and collate them back together in the Z vector. This is unbearably slow.
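The sequential version can be sketched as a plain loop, again assuming each row computes a dot product plus a bias. The `ideal_steps` helper is a deliberately idealised back-of-the-envelope count: it assumes every row takes one step and ignores memory transfers and synchronisation, so real speedups will be smaller.

```python
import math


def sequential_layer(W, x, b):
    """Compute Z one row at a time, the way a single core would."""
    Z = []
    for w_row, b_i in zip(W, b):
        z_i = sum(w * x_j for w, x_j in zip(w_row, x)) + b_i
        Z.append(z_i)  # collate each result back into Z
    return Z


def ideal_steps(n_rows, n_cores):
    """Idealised number of rounds needed if each core handles one row per round."""
    return math.ceil(n_rows / n_cores)


W = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]
x = [2.0, 1.0]
b = [0.5, -1.0, 0.0]

print(sequential_layer(W, x, b))   # [4.5, 0.0, 5.0]
print(ideal_steps(10_000, 1))      # 10000
print(ideal_steps(10_000, 2_000))  # 5
```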

This paper summarises how parallel computation helps with the speedup.

GPUs over CPUs?

A CPU is best known for being able to perform a wide variety of tasks, while a GPU focuses on one kind of task and does it really well. In the Machine Learning context, there's a lot of heavy number crunching taking place.

As such, GPUs can process very large datasets with ease and can run inference much faster than a standard-issue CPU (we all know this already). When dealing with real-life applications, the data is often large, unstructured, and multi-dimensional (with image data, voxels are already considered painful to deal with).

Conclusion

The cat's out of the bag – GPUs are here to stay in Machine Learning, a field filled with complex mathematical operations like matrix multiplication, addition, and dot products, that can easily be computed using parallel processing.

While GPUs are expensive for the average practitioner (like students or junior faculty members in academia), they are a great investment and will save hours of experimentation time. I definitely recommend getting one if it's within your means. GPUs are not replacements for CPUs, but having one in your setup really does the trick.

The speedup is very real!